Solid-state device manufacturers and artificial intelligence researchers have a symbiotic relationship in which two otherwise disparate sectors support one another. It's no exaggeration to say that the modern AI boom couldn't have happened without sophisticated microchip technology. Early "hollow-state" machines built around vacuum tubes couldn't possibly run the latest large language models at anything close to a reasonable speed.

On the other hand, design engineers in the computer industry increasingly rely on AI-based tools to design new packages. As integrated circuits have shrunk, the number of connections that must fit into a given amount of space has risen sharply. That has led many professionals to adopt AI-assisted design software that can dramatically speed up existing workflows.

Building an Integrated AI Toolchain

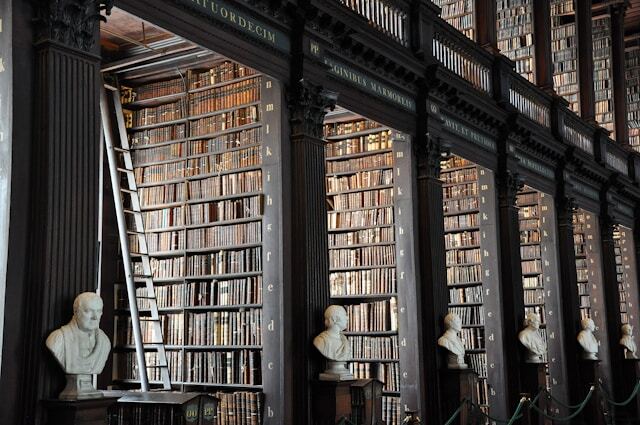

Engineering teams wouldn't be able to design modern IC packages without a large library of older schematics. Tracing a particular design lineage is an excellent way to see how people solved many of today's problems at some point in the past. Unfortunately, this kind of research is slow when done by hand. Training AI models on a large corpus of documents that lay out the architecture of various microprocessors can help by giving engineers a faster way to search that library.
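To make the idea of a searchable design library concrete, here is a minimal sketch that ranks documents against a plain-language query. It is only an illustration: it swaps in simple TF-IDF scoring with cosine similarity rather than a trained AI model, and the document names and summaries in `DOCS` are hypothetical stand-ins for real archived schematics.

```python
import math
import re
from collections import Counter

# Hypothetical one-line summaries standing in for archived design documents.
DOCS = {
    "doc-a": "flip chip package with copper pillar bumps for a high pin count processor",
    "doc-b": "wire bond package with a lead frame for a low cost microcontroller",
    "doc-c": "ball grid array package routing for a memory controller",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs):
    """Return per-document TF-IDF vectors and the IDF table used to build them."""
    counts = {name: Counter(tokenize(text)) for name, text in docs.items()}
    n_docs = len(docs)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for c in counts.values() for term in c)
    # Smoothed IDF so that terms found in every document keep a small weight.
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1.0 for t, df in doc_freq.items()}
    vectors = {
        name: {t: tf * idf[t] for t, tf in c.items()} for name, c in counts.items()
    }
    return vectors, idf


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query, vectors, idf, top_k=2):
    """Return up to top_k (document name, score) pairs with a nonzero score."""
    q_counts = Counter(t for t in tokenize(query) if t in idf)
    q_vec = {t: tf * idf[t] for t, tf in q_counts.items()}
    scored = sorted(
        ((cosine(q_vec, vec), name) for name, vec in vectors.items()), reverse=True
    )
    return [(name, round(score, 3)) for score, name in scored[:top_k] if score > 0]


vectors, idf = build_index(DOCS)
results = search("copper pillar flip chip", vectors, idf)
print(results)  # "doc-a" is the only document sharing terms with this query
```

A production tool would more likely rely on learned embeddings and a much larger corpus, but the workflow is the same: index the archive once, then rank documents against each query.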

Rather than doing this work manually, engineers can prompt an intelligent agent to generate additional designs based on an agreed-upon set of requirements. While it's unlikely that an AI could produce a fully working design entirely on its own, human engineers can review these autonomously generated schematics and suggest changes. This kind of software mirrors some of the new tracking applications embedded in logistics and communications equipment, which leverage the same innovations.

These programs also seem to foreshadow the development of new IC devices that may be just over the horizon.

Coming Innovations in the Semiconductor Market

Some of the most promising research isn't focused on how AI can change the design of solid-state products. Rather, it illustrates the influence of semiconductor engineering on AI deployment itself. For decades, the number of transistors on an integrated circuit doubled roughly every two years. This trend is known as Moore's Law, and it made it seem as though innovation in this sector would never stop.
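The doubling trend behind Moore's Law is easy to express as arithmetic: a starting count multiplied by 2 raised to the number of doubling periods that have elapsed. The sketch below assumes the commonly cited two-year doubling period and uses the Intel 4004 of 1971, with roughly 2,300 transistors, as a starting point. The function name and its parameters are illustrative, and the result is a projection of the trend, not a measurement of any real chip.

```python
def projected_transistors(base_count, base_year, target_year, doubling_years=2.0):
    """Project a transistor count forward under an idealized doubling trend.

    doubling_years is an assumption (two years is the commonly cited figure).
    """
    if doubling_years <= 0:
        raise ValueError("doubling_years must be positive")
    periods = (target_year - base_year) / doubling_years
    return base_count * 2 ** periods


# Intel 4004 (1971, roughly 2,300 transistors) projected ten years ahead:
# five doubling periods, so 2,300 * 2**5.
print(projected_transistors(2300, 1971, 1981))  # 73600.0
```

Running the same projection over several decades produces counts in the tens of billions, which shows why the trend was so hard to sustain.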

Advances in fabrication have produced transistors so small that it's becoming impractical to pack many more of them onto a single device. This threatens to make the servers designed to run LLM software unmanageably large. Semiconductor engineers are responding by designing distributed systems that spread the work of running these programs across multiple locations. Ironically, many of the same AI tools these networks were built to scale are also helping engineers learn how to work with them.

As consumer interest in LLM and machine learning technology continues to grow, there will be an ever-increasing need for hardware on which to run these applications. Semiconductor industry organizations are already ramping up production to meet this demand.